Growth Experiments for AI Founders

Running experiments (think A/B tests) is usually not a founder's first choice. Leveraging personal networks, blasting lead lists, and being active on socials like LinkedIn and X are the usual go-tos. However, ever since I learned about the "rule of 72", I can't help but think that experiments are an essential tool even at the early stages of a startup.

I first learned about the "rule of 72" while working at Point72 Asset Management. The rule states that you can estimate the number of periods t required to double an investment by dividing 72 by the growth rate r per period, with r expressed as a percentage. (See the wiki page for more context.)

This concept also applies to AI founders as they punch through walls to make growth happen.

As AI founders, we are under immense pressure to achieve rapid growth because of the opportunity to disrupt SaaS incumbents. YC believes there will be many billion dollar startups that emerge from the AI platform shift. With the large influx of venture dollars betting on AI startups, greenfield opportunities are starting to get crowded with new competitors in 2025. Markets like developer tools, sales, and customer support are already heavily saturated.

And the competition is smart. There are more resources than ever before on solving product-market fit (I've especially enjoyed First Round's PMF method), and hungry founders are willing to put in more hours than ever before. The AI startup frenzy has led to a new regime where it's not enough to just solve the idea maze; founders have to solve the growth maze as well.

This is why I think founders should care about experiments:

Consider the following scenario

If you acquire 10 new customers a week, discovering a repeatable insight to increase customer acquisition by 5% on average per week leads to doubling weekly customer acquisition in less than 4 months. (72 divided by 5% growth per week is 14.4 weeks)

Running intentional experiments that yield 5% average weekly improvements results in 2,329 customers acquired over 1 year compared to 520, roughly 4.5x as many.
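If you want to check the arithmetic yourself, here is a minimal sketch. It assumes week 1 starts at 10 customers and that the 5% improvement compounds every week for 52 weeks; the function names are mine, made up for illustration.

```python
import math

def weeks_to_double(weekly_growth_pct: float) -> tuple[float, float]:
    """Return (rule-of-72 estimate, exact value) for the doubling time in weeks."""
    rule_of_72 = 72 / weekly_growth_pct
    exact = math.log(2) / math.log(1 + weekly_growth_pct / 100)
    return rule_of_72, exact

def customers_in_a_year(start_per_week: float, weekly_growth_pct: float, weeks: int = 52) -> float:
    """Total customers acquired when weekly acquisition compounds each week."""
    rate = 1 + weekly_growth_pct / 100
    # Week 0 acquires start_per_week; every later week grows by the same rate.
    return sum(start_per_week * rate ** week for week in range(weeks))

estimate, exact = weeks_to_double(5)
print(f"Doubling time: {estimate:.1f} weeks (rule of 72), {exact:.1f} weeks (exact)")
print(f"Flat 10/week: {customers_in_a_year(10, 0):.0f} customers")
print(f"5% weekly growth: {customers_in_a_year(10, 5):.0f} customers")
```

The rule of 72 is only an approximation, so the exact doubling time comes out slightly shorter than 14.4 weeks.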

YES, acquiring 10 customers consistently per week is not realistic. There are ups and downs. You acquire customers, you benefit from network effects, you increase your product's ability to solve problems and that increases growth. However, running experiments is practically a freebie ON TOP of your natural growth trajectory. Most importantly, running experiments tells you what actually moves the needle on growth which is critical for repeatable growth.

This practice allows you to identify the levers that provably result in better outcomes for you specifically and best of all, are things you can control when it comes to growth.

This sounds like a lot of work, yikes

It's not. I can justify it as a solo founder which means you can do it too - you just need to know how to make it stupid simple and efficient.

The answer is simply: 100.

100 as in the number of people per experiment group.

As a founder, I found this to work well so far because for funnel sections with low conversion rates, you're generally looking for the bigger wins. Then, for funnel conversion rates closer to 50-60%, you're happy to see 30-40% relative improvements.
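To get a feel for what 100 people per group can and can't detect, here is a rough sketch using the standard normal-approximation formula for the minimum detectable effect. The 5% significance level and 80% power are my assumptions (common defaults, not something I tuned), and the function name is hypothetical.

```python
from statistics import NormalDist

def min_detectable_lift(baseline: float, n_per_group: int = 100,
                        alpha: float = 0.05, power: float = 0.80) -> tuple[float, float]:
    """Approximate smallest (absolute, relative) lift detectable with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    # Rough simplification: use the baseline variance for both groups.
    std_err = (2 * baseline * (1 - baseline) / n_per_group) ** 0.5
    absolute = (z_alpha + z_power) * std_err
    return absolute, absolute / baseline

for baseline in (0.05, 0.20, 0.50):
    absolute, relative = min_detectable_lift(baseline)
    print(f"baseline {baseline:.0%}: ~{absolute:.1%} absolute, ~{relative:.0%} relative")
```

Under these assumptions, a 50% baseline needs roughly a 40% relative lift to show up reliably, while a 5% baseline needs the conversion rate to more than double. That is why low-converting funnel stages are only worth testing with interventions you expect to be big wins.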

Keep it simple, it's a 3-step process

- Pick 1 intervention to focus on that drives customer acquisition.

- Run your experiment for 1 week where you evaluate customer acquisition rates with 100 people who have the intervention and 100 who do not.

- Compute the t-stat on the conversion rate difference between the two experiment groups.
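For step 3, here is a minimal sketch using only Python's standard library. Note the swap: it runs a two-proportion z-test, which is a common large-sample stand-in for the t-stat when the outcome is a yes/no conversion. The function name and the example counts are hypothetical, not my actual results.

```python
from statistics import NormalDist

def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pool both groups to estimate the conversion rate under "no difference".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if std_err == 0:
        # Everyone (or no one) converted in both groups: nothing to compare.
        return 0.0, 1.0
    z = (rate_a - rate_b) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts for illustration only, not results from this post.
z, p = two_proportion_test(conv_a=30, n_a=100, conv_b=15, n_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below 0.05 is the usual bar for calling a result statistically significant. With only 100 people per group, the normal approximation gets shaky when conversions are very rare, so treat borderline results with caution.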

What is an intervention?

An intervention is any attribute or lever you can filter on or pull that may impact your overall customer acquisition rates. This includes:

- Offering incentives like a price reduction, DoorDash gift card, personalized gift to drive customer acquisition.

- Filtering for specific attributes like "has >N mutual connections on Linkedin", "works in the Bay Area", or "company founded after 2021".

- And any other fork in the road you can think of when deciding what to do to acquire customers.

How about a real example?

As a founder in the early innings of pre-PMF, finding 100 people per experiment group is possible primarily at the top and middle of the funnel. Recently, I wanted to concretely understand how much of a difference the following interventions make:

- Request to connect on Linkedin for profiles with a higher number of mutual connections. (Experiment #1)

- My hypothesis here is that a profile with many mutual connections could indicate that I might actually run into this person in real life because we have so much overlap. Therefore, why not connect earlier?

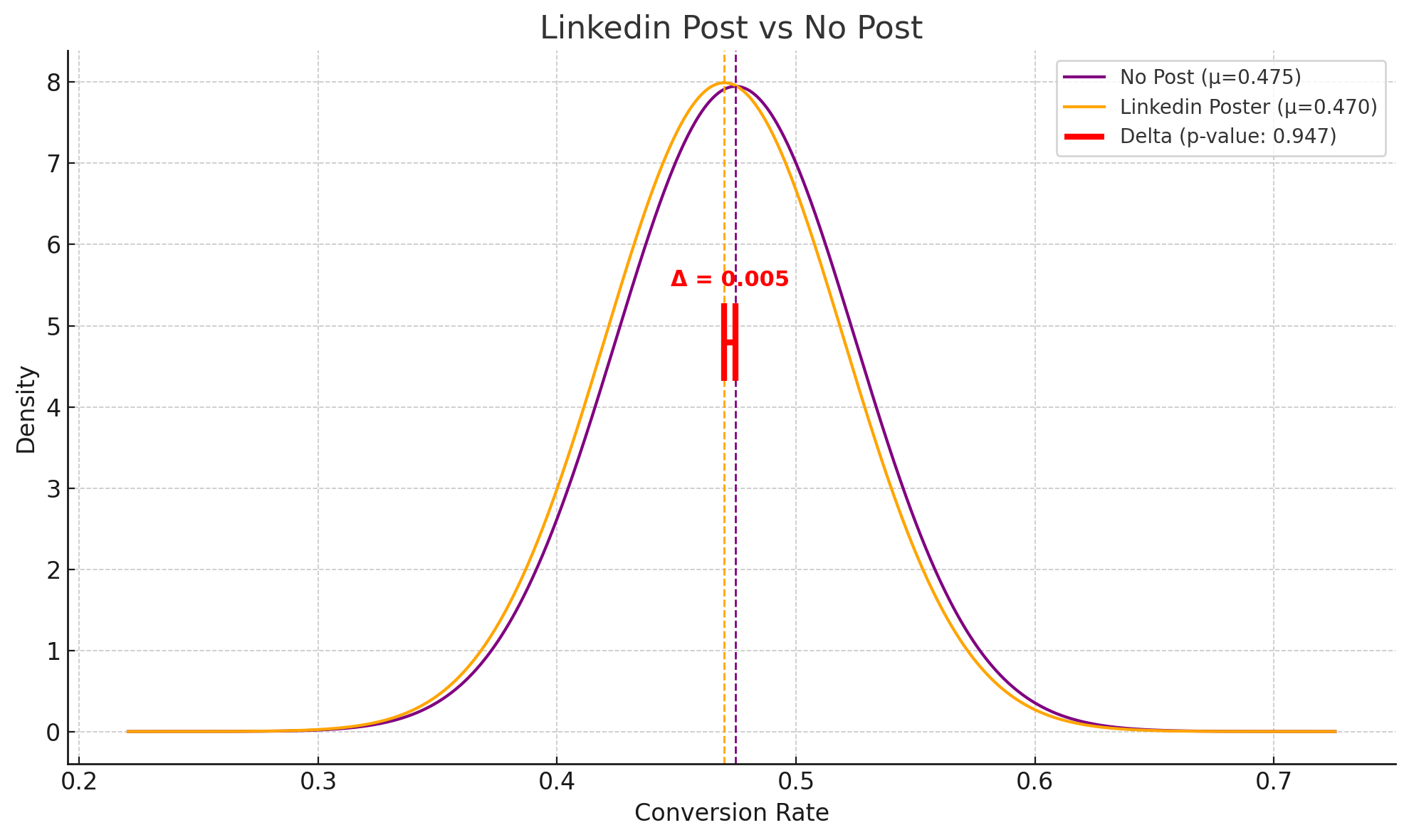

- Request to connect on Linkedin for profiles that make Linkedin posts. (Experiment #2)

- My hypothesis for LinkedIn posters is that people who post on LinkedIn are interested in sharing their content with larger audiences. Therefore, they are biased toward accepting connection requests.

Note that I've already pre-filtered the audience I'm sampling from with a few attributes that I believe help with conversion like '2nd degree connections only' (although I may run experiments on these later).

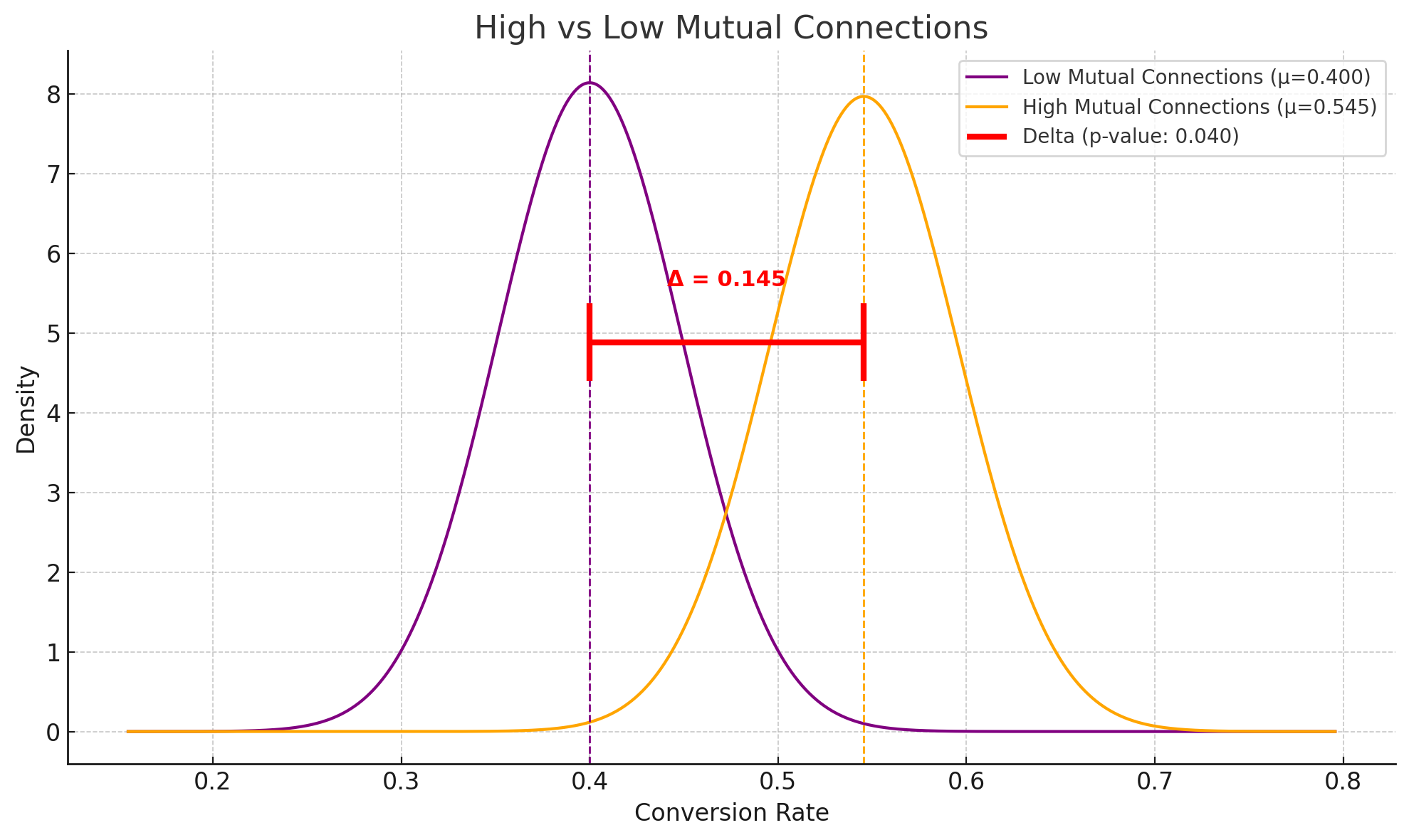

In Experiment #1

I first determined what a "high number of mutual connections" specifically meant. I reviewed a handful of LinkedIn profiles and arrived at:

- [Group A] 8+ mutual connections as my P80 number for "high number of mutual connections".

- [Group B] 4 mutual connections as my P50 number and therefore anything below that as "low number of mutual connections".

I then fetched 100 profiles with "high" mutual connections and 100 profiles with "low" mutual connections.

In Experiment #2

Going through profiles to find those with Linkedin posts was more straightforward and therefore I just had to fetch 100 profiles with a Linkedin post and 100 without.

Experiment Results

After reviewing the experiment results, I'm really excited about the outcomes. This emphasizes the point that not all advice is helpful for you specifically and you should be careful about your assumptions.

Experiment #1 had a substantial, statistically significant lift. Comparing Group A to Group B showed an absolute conversion delta of 14.5 percentage points and a 36% lift on a relative basis! This suggests that, for me, unless I have a good reason to reach out to someone with a low number of mutual connections, I should probably err on the side of spending my time on someone who's more connected with my profile.

This is a small detail that seems obvious in hindsight but it's not abundantly clear that this attribute, given its specific implementation, is something I should prioritize as I attempt to grow my Linkedin network.

Now, you're probably saying "Well duh, that's obvious. This is stupid, I don't need an experiment to figure that out."

But Experiment #2 is where things get interesting. Experiment #2 had no detectable lift. A p-value of 0.947 means the two groups converted at nearly identical rates over the course of the experiment, so there is no evidence that the intervention made a difference.

Was this obvious? Not to me at least.

First of all, this means that the existence of LinkedIn posts is not a significant differentiator when it comes to conversions with AI engineers in the United States who are 2nd degree connections to my profile. This could be due to the age of the post, engineers not really caring about expanding their LinkedIn network (whereas other personas might care), or the fact that I did not engage with their post prior to requesting to connect.

As a follow up, I'll probably want to further explore why this is the case. Or, if hunting down and engaging with Linkedin posts from AI engineers seems like too much of a time sink, I'll chase after other interventions first before coming back.

The Difference Made

Going forward, I have a concrete repeatable insight and can take advantage of my experiment findings. Using this insight compared to the status-quo suggests that I would convert at a 55% connection acceptance rate compared to a 47% acceptance rate. Although the experiment group with 8+ mutual connections had a much better lift than the experiment group with <4 mutual connections, I need to compare the lift to the state of the world prior to the experiment, which ran at a baseline conversion rate of 47%. So the final lift is approximately 17%.
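The lift numbers are easy to reproduce. In this small sketch, the 55% and 47% rates come straight from the paragraph above. The low-mutuals rate is an assumption: I back it out by treating 55% as Group A's rate and subtracting the 14.5-point delta. The function name is just for illustration.

```python
def relative_lift(treated_rate: float, baseline_rate: float) -> float:
    """Relative improvement of treated_rate over baseline_rate."""
    return (treated_rate - baseline_rate) / baseline_rate

high_mutuals = 0.55                  # acceptance rate with 8+ mutual connections
status_quo = 0.47                    # baseline before the experiment
low_mutuals = high_mutuals - 0.145   # assumption: inferred from the 14.5-point delta

print(f"vs. low-mutuals group: {relative_lift(high_mutuals, low_mutuals):.0%}")
print(f"vs. status quo: {relative_lift(high_mutuals, status_quo):.0%}")
```

The same intervention looks like a 36% win against the low-mutuals group but a 17% win against the status quo, so always be clear about which baseline a lift is measured against.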

Why didn't I make Group B in Experiment #1 the status-quo/previous baseline?

The real answer? I forgot 😄. But this actually worked out really well because a 36% lift was detectable given that we were working with 100 people per experiment group.

This brings me to a useful tip: When possible, try to run an 'exaggerated' experiment/intervention. We compared a group with a 'high' attribute value against a group with a 'low' attribute value. The benefit here is that we observe a large enough difference in outcomes such that our experiments pick up on the delta and we're able to achieve statistical significance that we would not have been able to otherwise.

Concluding Thoughts

Running a single experiment took roughly 1-2 hours of effort on a Sunday to assign experiment groups and 30 minutes to tally up conversions 1 week later. Going forward, I will be going all-in as much as possible on LinkedIn profiles with higher numbers of mutual connections and will mostly abandon LinkedIn posters as a target audience to filter on. A 17% lift in connect-rates would usually take a bit more than 3 weeks of compounding 5% week-over-week connection growth, so it's okay to not hit stat-sig results each week. But using this framework makes it pretty clear which strategies work and which don't. Best of all, I can keep exploiting this source of lift week after week with confidence because I know it works for me specifically.

Amidst the chaos of the hit-or-miss nature of posting content, mass emailing leads lists, and personal network intros, I believe small experiments like this are worth adding as a tool to allow founders to learn what concretely and repeatably drives growth every week.

If you've enjoyed the read, please drop a like or comment! If you're building in AI, we'd also love to connect and just learn about what you're working on!

Finally, if you could use some help running your own experiments, don't hesitate to reach out to me on Linkedin or at jon@dimred.com! I'd love to help.